The Latest from TechCrunch

- Fly Or Die: Google Nexus 7

- Surprisingly Good Evidence That Real Name Policies Fail To Improve Comments

- From Information to Understanding: Moving Beyond Search In The Age Of Siri

- Vertical Is The New Horizontal: How The Cloud Makes Domain Expertise More Valuable In The Enterprise

- A Few Good Rounds: Trends In Venture Capital Over The Last 12 Years

- Let’s Not Get Too Excited About Google Fiber… Yet

- Move Over, Pebble: MetaWatch’s New ‘Strata’ Aims To Make A Splash On Kickstarter Too

- Please Don’t Watch NBC Tonight. Or Any Night.

- BlogFrog Shows The Power of Women Bloggers But Trust Critical As Influencer Marketing Programs Rise In Popularity

- Bitly Announces Realtime, A Search Engine For Trending Links

- Stranded Vessels

- Kickstarter: Meet The Vers 1Q, A Stunning 2-inch Battery-Powered Bluetooth Speaker

- TechCrunch PSA: Olwimpics Blocker Blocks The Olympics

- Gillmor Gang: London Calling

| Fly Or Die: Google Nexus 7 Posted: 29 Jul 2012 07:00 AM PDT  Ever since I/O and the unveiling of Android 4.1 Jelly Bean, the blogosphere has been sizing up Google’s new Nexus 7 tablet. The verdict in almost every case is good, including our very own iPad lover’s take. John and I thus found it only fitting to bring the little 7-inch tablet into the studio for Fly or Die. The tablet, with a 7-inch IPS 1280×800 display, a Tegra 3 quad-core processor, and the latest version of Android, didn’t fail to impress. And the specs have very little to do with it. Sure, that Tegra 3 proc probably contributes quite a bit to the snappiness of the tablet, but the display isn’t anything to write home about, nor is the measly 8GB of onboard storage. (I know there’s a 16GB model, but without external memory you’ll be walking around with a rather “light” amount of content.) What makes the tablet great is that it’s the first truly premium tablet available in this size and price range. Amazon’s Kindle Fire is great, but it isn’t really a computer replacement the way the iPad is; it is more of a mobile Amazon content portal. And the iPad, though exceptional in performance and usability, is a little large and way too expensive to fit into the category. The Nexus 7 fills that gap, and so we believe it’ll fly, and fly into many a home. It’s already sold out once. The real question is whether or not it’ll still be in such high demand once Apple unveils that long-awaited iPad mini. September will be here before you know it. |

| Surprisingly Good Evidence That Real Name Policies Fail To Improve Comments Posted: 29 Jul 2012 04:00 AM PDT YouTube has joined a growing list of social media companies that think forcing users to use their real names will make comment sections less of a trolling wasteland, but there’s surprisingly good evidence from South Korea that real name policies fail at cleaning up comments. In 2007, South Korea temporarily mandated that all websites with over 100,000 viewers require real names, but scrapped the mandate after it was found to be ineffective at cleaning up abusive and malicious comments (the policy reduced unwanted comments by an estimated 0.9%). We don’t know how this hidden gem of evidence skipped the national debate on real identities, but it’s an important lesson for YouTube, Facebook and Google, who have assumed that fear of judgement will change online behavior for the better. Last week, YouTube began a policy of prompting users to sign in through Google+ with their full names. If users decline, they have to give a valid reason, like, “My channel is for a show or character”. The policy is part of Google’s larger effort to bring authentic identity to its social media ecosystem, siding with companies like Facebook, which have long assumed that transparency induces better behavior. "I think anonymity on the Internet has to go away," argued former Facebook Marketing Director, Randi Zuckerberg. "People behave a lot better when they have their real names down. … I think people hide behind anonymity and they feel like they can say whatever they want behind closed doors." For years, the national discussion has gone back and forth between critics who say that anonymity is a fundamental right of privacy and necessary for political dissidents, and social networks that worry about online bullying and the impact that trolls have on their communities. Enough theorizing: there’s actually good evidence to inform the debate. 
For 4 years, Koreans enacted increasingly stiff real-name commenting laws: first for political websites in 2003, then for all websites receiving more than 300,000 viewers in 2007, and finally for those receiving more than 100,000 viewers a year later, after online slander was cited in the suicide of a national figure. The policy, however, was ditched shortly after a Korean Communications Commission study found that it only decreased malicious comments by 0.9%. Korean sites were also inundated by hackers, presumably after valuable identities. Further analysis by Carnegie Mellon’s Daegon Cho and Alessandro Acquisti found that the policy actually increased the frequency of expletives in comments for some user demographics. While the policy reduced swearing and “anti-normative” behavior at the aggregate level by as much as 30%, individual users were not uniformly deterred. “Light users”, who posted 1 or 2 comments, were most affected by the law, but “heavy” ones (11-16+ comments) didn’t seem to mind. Given that the Commission estimates that only 13% of comments are malicious, a mere 30% reduction cleans up the muddied waters of comment systems by a depressingly negligible amount. The finding isn’t surprising: social science researchers have long known that people eventually begin to ignore cameras videotaping their behavior. In other words, the presence of some phantom judgmental audience doesn’t seem to make us better versions of ourselves. |
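
To put the Commission's figures side by side, a rough back-of-the-envelope calculation helps. The sketch below is illustrative only: it assumes the 13% malicious share and the 30% aggregate reduction quoted above can simply be multiplied together, which the studies themselves do not claim, and the function name is made up for this example.

```python
def remaining_malicious_share(malicious_share, reduction):
    """Share of all comments still malicious after a proportional reduction.

    Assumption: the reduction applies evenly to the malicious share.
    """
    return malicious_share * (1 - reduction)


before = 0.13   # Commission estimate: 13% of comments are malicious
reduction = 0.30  # upper-end aggregate reduction cited in the analysis

after = remaining_malicious_share(before, reduction)

print(f"Malicious share before: {before:.1%}")
print(f"Malicious share after:  {after:.1%}")
print(f"Change: {(before - after) * 100:.1f} percentage points")
```

Under those assumptions, the malicious share falls from 13% to roughly 9.1% of all comments, a change of about 3.9 percentage points.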

| From Information to Understanding: Moving Beyond Search In The Age Of Siri Posted: 28 Jul 2012 11:00 PM PDT  Editor’s note: Nadav Gur is the founder and CEO of Desti, a virtual personal assistant for travel incubated by SRI. Previously, he was founder and CEO of Worldmate, the first mobile travel app. "Any fool can know. The point is to understand." –Albert Einstein Since the launch of Siri on the iPhone 4S last year, the media has been abuzz with the potential implications of what's next – from Google's Eric Schmidt commenting that Siri poses a great threat to Google, to countless articles by VCs and thought leaders. Has artificial intelligence finally come of age? And is it ready for broader applications in industries ranging from travel to finance? Are we destined to grapple with fast-following Siri clone after Siri clone, or will the category evolve? Siri excels at setting reminders (and a little less so at ordering Scottish lunches). But is she ultimately more than a better front-end for basic smartphone functions? This post is about changing how we use computers to manage knowledge, and not just information. Knowledge = Information + Meaning You may "know" that it's 100 degrees out there today, but unless you also know that 100 degrees equates to meaning that it's hot, that piece of information is pretty useless. To understand the concept of "100 degrees", one has to know the meaning of 100 degrees and the concept of hot. That sophistication is called domain knowledge. Domain knowledge allows one to give meaning to information. As a result, the value one gets from knowledge helps make better decisions. The cashmere sweater stays home today. With knowledge comes understanding, which helps users make better choices. Better choices mean fewer mistakes, and an increase in productivity. 
Better Search Through Understanding This focus on meaning is the foundation of semantics and semantic search (an oft-abused term), which ultimately means searching for concepts, not keywords. Over the last 10+ years we've all been conditioned to expect search engines to basically match keywords to documents. We then receive a list of a gazillion links of pages that include some permutation of our keywords. It is then the user's job to manually sift through all of these listings in the hope that one of them is a good match. It goes without saying that this is a lot of work. A semantic search engine, on the other hand, would attempt to understand what we're looking for, and then retrieve the best results, whether or not the specific words we used are mentioned or not. The real promise of this approach is that by understanding our intent, we will get more relevant and more accurate results. Part 1 is to understand what the user is asking for, and part 2 is to understand what is discussed on a particular page. Siri has made strong headway into literally understanding you (voice to text) but more importantly about deriving meaning from what a user has just said. The Holy Grail is to take this ability to understand what people asked for and to also understand what's written across the billions of pages comprising the Internet. Instead of "organizing the world's information" you'd be organizing the world's knowledge. Understanding what people are asking or saying and converting it into usable meaning is a huge first step towards making heads or tails out of the billions of pieces of information scattered across the web. The traditional way of interfacing with information, whether with keywords or by refining searches with sliders, menus, or widgets just doesn't cut it anymore. To truly unleash the power of search is to use natural language, in voice to text or even text to text. 
With this new paradigm of starting a search we will be able to better unlock the value that is hidden in unstructured data and provide infinitely better results. This is why the natural language front end of Siri, a forerunner in the category, is such an existential threat to Google (or at least its existing keyword-based search). Google understands this better than anyone else, and its recent announcements of the forthcoming Google Semantic Search are a direct response. The Rise of Vertical VPAs The immediate next step is to help systems develop domain expertise, and this is no easy task. Even for the brightest people domain knowledge is something that can take decades to achieve, and even then no one can be a true expert on everything. Such expertise is at the core of advancing us beyond the search paradigm of today's digital information to an age of Virtual Personal Assistants. SRI, which of course developed Siri from decades-long research on the CALO project, understands that for VPAs to be truly useful, they need to be focused on a specific domain of knowledge. SRI actually has developed that full core technology and begun systematically applying it to specific verticals. It was recently unveiled as the foundation underlying BBVA's Lola, a virtual personal assistant for banking. My company Desti was likewise incubated at SRI, and is built on top of the same stack. We're creating a trusted advisor who can take a user's travel intent and provide directly relevant answers and actions, all tailored specifically to that individual. According to Google, the average user visits 22 sites prior to booking travel. Planning a trip can be unnecessarily frustrating and time consuming. What if planning your travel was as easy as interacting with a friend from the area you plan to visit, one whose on-the-ground recommendations cut through the clutter with aplomb, and who knows what you enjoy? 
SRI's overall VPA play is our well-reasoned effort to apply the Siri concept in a way that enables it to be adapted to specific domains or verticals, encapsulating the specific language, knowledge and reasoning that are unique to each. Instead of teaching an assistant to be all things to all people, we are teaching one virtual assistant to be a bank teller (Lola), another one to play educational games (Kuato), and yet another to be a travel agent. This is an extraordinarily difficult challenge, but one that is finally becoming a reality. The proof will be in the pudding of course — in how well we instill domain expertise in each new venture. Summary We want a VPA that understands us as individuals — that leverages our past for our present. A VPA has deep domain knowledge, and can finally move us beyond a big list of blue links. Software really can provide us with real and expert assistance when and where we need it most. What we're seeing is a paradigm shift on multiple levels, one that will play out more profoundly over the coming year than it did the last. We're on the cusp of an entirely new thing:

At the end of the day, what people really want is to be…understood. That starts with a conversation, and it gets better when that conversation begets a real relationship — one based on mutual respect, a shared language, and a certain intimacy. |

| Vertical Is The New Horizontal: How The Cloud Makes Domain Expertise More Valuable In The Enterprise Posted: 28 Jul 2012 07:21 PM PDT  Editor’s note: Gordon Ritter is a founder and general partner at Emergence Capital, focused on cloud companies. In the days before the cloud, on-premise software providers that focused on selling into a vertical market were considered second-class citizens to the "big guns" selling into the broader horizontal marketplace. The real "win"—in market share, wallet share and ultimately, profits—was the broadest approach. The notion of specializing in solutions that serve a market niche or specific industry was considered limited unless it was just the start of something more horizontal. However, with the advent of the SaaS model, the tables have turned. Focusing on niche verticals or specific functional areas may be one of the most successful strategies of the enterprise cloud software era. While there are still a few providers for whom the "all-things-to-all-people" approach is quite successful (Microsoft, Oracle), the vast majority of successful cloud solution providers have struck gold by picking one thing and doing it extremely well. Mass customization by vertical or functional slice with a purpose-built solution that meets your customer's unique needs is becoming the new key to success in the software industry. Why? The Obvious Benefits Some of the drivers are fairly obvious. Going vertical dramatically lowers customer acquisition costs (CAC). Refining your audience reduces the sheer number of potential targets, enabling you to reach customers more quickly with fewer sales people and increasing word-of-mouth recommendations, thus significantly improving sales and marketing efficiency. In most traditional models, CAC is typically equal to or twice the annual contract value. In other words, you might spend $100k to land the customer and see only half that in recurring revenue the first year. 
Many times it takes two years just to break even and three years to turn a profit. But, with vertical-focused companies in our portfolio, CAC can be as low as one-fourth of the annual contract value, delivering immediate ROI in the first year alone. Going vertical also enables you to capture a larger market share more quickly. With a horizontal solution, there's so much ground to cover, in both geography and business sizes, few can achieve more than 5 to 10 percent share. However, focusing on a specific vertical or functional area enables you to achieve much greater penetration—up to 60 percent share in some cases—and solidifies your solution as the industry standard. In a vertical, become the standard and the whole industry adopts your peer-approved and recommended solution. Capturing the lion's share of the market enables you to gobble an even larger piece of the pie. Case in point: In just four years, Veeva Systems has eclipsed Oracle as U.S. market share leader for life sciences CRM platforms, simply by specializing in the life sciences industry. Others have also garnered sizable share in their respective verticals: OpenTable claims 12 percent share of the North American market for seated diners and WebMD commands more than 40 percent of all web traffic in online health, according to Compete. New Benefits Emerging While lower cost and larger market share might be "old hat," new benefits of going vertical are emerging that may be even more powerful. Cloud platforms enable innovative companies to replace competing technologies or entire swaths of functionality in just a few quarters with an ultra-rapid deployment model, whereas such a feat would have taken years in the old days of on-premise, installed software. This unprecedented new paradigm allows companies to capture a much larger share of wallet and leverage the inherent data gathered to deliver impressive value-added analysis. 
Delivering Layers of Value The focused approach enables you to garner a larger share of wallet by deploying software modules tuned for both the taxonomy and regulatory issues of a given vertical. As new customer needs come to light, the unique infrastructure of the cloud model makes it much quicker, easier and less expensive to develop and deploy new modules of value, offering new layers of functionality specially tuned for the given industry. Veeva (an Emergence portfolio company) has mastered this layer-cake approach, taking a slice of a number of horizontal solutions (e.g., CRM, content management, marketing automation), and focusing them specifically on the deep regulatory issues of the life sciences industry. In fact, in just one year, the company has twice rolled out entirely new functionality that entire companies were once built upon. Its Veeva Vault content management solution was quickly followed by its Veeva Network marketing and data platform, all built from scratch and deployed in the span of just 18 months. Another great example is RealPage (Ticker: RP), focused on property management solutions. With a market cap of $1.6B, the company has expanded into adjacent segments, including apartment complexes, student housing and senior communities among others. Leverage Data to Become an Invaluable Advisor One of the fundamental advantages of the cloud model is its ability to capture data at an unprecedented granular level. With an installed solution, you may know how many seats are provisioned on the system; with a SaaS solution, you know exactly how many active users there are, and more importantly exactly what they use (and don't use) on your platform. In the cloud, you can capture, archive and analyze user data, as well as virtually every other piece of data that comes into the system. This ability to analyze and draw correlations across various layers enables you to discover insights your customers may have never known existed. 
Social software provider Lithium Technologies (another Emergence company) leverages its depth of knowledge in building successful consumer communities to help its customers realize a "Community Health Index" (CHI), a detailed usage analysis that is highly predictive about whether a community will succeed or fail. Lithium has been able to streamline this process, automate the creation of CHI reports and bundle them with up-sell versions of its service to monetize this valuable data. Zillow (Ticker: Z) is another great example. While the company tracks every click to deliver a more tailored experience to its users, it also leverages the power of crowdsourcing to augment their data vault by allowing owners to add detailed information to their properties such as description, photos, etc. Leveraging data to help your customers succeed is a powerful and effective way to elevate your company from software vendor to invaluable advisor. This feat is much easier in a vertical or specific functional area. The taxonomy and "schemas" of the data are much easier to correlate than if you are working with, say, pharma companies and retailers on the same platform. Ultimately, this ability to extract hidden value from inputted and behavioral data is perhaps the most critical component to market success for any SaaS vendor. Serving in this C-level advisory capacity not only makes your solution more valuable to the customer but also enhances your overall position as a trusted business partner. As a result, demonstrating your value as a data analyst and advisor solidifies your role as a market expert—and the market leader. At Emergence Capital Partners, we've seen this focused approach drive customer acquisition costs down as much as 75 percent. That's a powerful incentive in a business where sales and marketing is often the largest cost of doing business. [Image via Delish.] |
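
The customer acquisition cost comparison made earlier in this piece can be checked with simple arithmetic. The sketch below is a simplification, not Emergence Capital's model: it assumes flat recurring revenue, ignores the cost of delivering the service and any churn, and uses a hypothetical $50k annual contract value (half of the $100k acquisition spend in the example above). The function name is illustrative.

```python
def payback_years(cac, annual_contract_value):
    """Years of contract revenue needed to cover customer acquisition cost (CAC).

    Assumption: revenue is flat each year and no other costs are counted.
    """
    return cac / annual_contract_value


acv = 50_000  # hypothetical annual contract value, in dollars

# Traditional model: CAC is twice the annual contract value.
traditional = payback_years(cac=2 * acv, annual_contract_value=acv)

# Vertical-focused model: CAC is one-fourth of the annual contract value.
vertical = payback_years(cac=0.25 * acv, annual_contract_value=acv)

print(f"Traditional: {traditional:.2f} years to recover CAC")
print(f"Vertical:    {vertical:.2f} years to recover CAC")
```

With these inputs the traditional case takes two years of revenue to recover the acquisition spend, while the vertical case recovers it within the first quarter of the first year.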

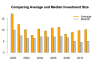

| A Few Good Rounds: Trends In Venture Capital Over The Last 12 Years Posted: 28 Jul 2012 05:00 PM PDT  Editor’s note: This is the second article in a series by Redpoint Ventures principal Tom Tunguz examining trends in the public and private technology markets. He recently discussed four trends in the public technology markets. Today, he compares the current state of US venture capital to historical norms. First, we will examine the inflows into the venture capital market: dollars raised by venture capitalists. Then we will explore the outflows, VCs’ investment pace, contrasting the dollars deployed over time. Last, we will investigate market sentiment and consequent price fluctuations. Venture Dollars Raised Fall 27% Below 15 Year Trailing Median The venture capital industry is in the midst of a contraction. Since 2001, limited partners have invested a median of $22B each year in venture capital. Over the last 3 years, those figures have dropped by about 27% to $16B annually. Venture Funds Are 14% Smaller In 2011, fewer firms invested less money from smaller funds than on average over the past 12 years. In 2011, 182 venture firms raised funds, about 16% below the 12 year median. The average 2011 venture fund is 14% smaller than the 12 year median. However, there is increasing concentration of capital in well-known firms, a flight-to-quality phenomenon. Historically, the top 25 firms have raised about 30% of all venture dollars. During the first half of 2012, the top 10 firms raised 69% of all dollars, a tremendous concentration. These large funds skew average fund size upward, suggesting smaller firms are disproportionately affected by decreasing fund sizes governed by a declining LP capital base. Venture Investing Pace Is Precisely Average

Investing pace cannot remain constant forever because venture capital inflows are declining. But this rate may be maintained for a few years. VCs raise funds for ten years and typically invest all the dollars raised within the first four years. The difference between dollars raised and invested is called the overhang. This overhang provides the extra capital to maintain the current investment pace. Investment Sizes Are Growing In Later Stages Although most pricing data is private, we can use median investment size as a proxy for pre-money valuations if we assume the ownership stake a VC takes in an investment has remained constant over time. Seed investment sizes have remained relatively constant over the past ten years, although the data is most sparse for this asset class since many investments are unreported. Series A prices have been declining steadily over the past 10 years at a rate of 5%, while Series B and Growth investments fell dramatically during the financial crisis. As public market prices fell, so did Series B and Growth valuations. However, these negative trends have reversed in the past year: Series B and Growth rounds have increased in size and are now both about 10% greater than the 12 year mean. These data contradict the market perception of ever-larger rounds at ever-better prices. A Tale of Two Markets Performing analyses on the median investment sizes masks the outliers that make headlines and consequently are the most visible indicators of the state of the fundraising market. The average dollars invested across all stages has risen by 25% since 2008, implying outliers raise money at sufficiently high prices to dramatically skew the average higher than the median. Much like the venture capitalists who invest in these companies, a few very high profile startups are raising disproportionate amounts of capital. Over the past 12 years the difference between average and median investment sizes has never been as great as in the past 2 years. 
In 2010 and 2011, the average investment was twice as large as the median investment. These pricing trends describe a tale of two markets. A small number of brand name startups raise large, generously priced rounds from top investors with large funds, skewing data upward. Meanwhile the rest of the venture capital market has contracted and investors are maintaining strong price discipline, investing in relatively smaller rounds at lower prices. Reversion to the Mean The venture capital market is evolving. It’s not unreasonable to expect most of the metrics describing the venture capital industry to trend toward the mean: slight increases in dollars invested in venture capital, continuity for investment pace and capital deployed, steady valuations in early rounds but declining valuations in later rounds. Such a reversion may take time. After all, early 2012 data on the fundraising market indicates contracting trends are persisting. For now, at least, the market is in a Dickensian state. Data used in this analysis are publicly available through Dow Jones VentureSource and the NVCA. |
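
The gap between average and median round sizes described above is easy to reproduce with a toy data set. The round sizes below are invented for illustration and are not VentureSource or NVCA data; only the $22B and $16B fundraising figures come from the article. The helper name `percent_drop` is likewise hypothetical.

```python
from statistics import mean, median

# Hypothetical round sizes in millions of dollars (not real market data).
typical_rounds = [3, 4, 5, 5, 6, 7, 8]
outlier_rounds = [30, 40]  # a couple of headline-grabbing rounds
all_rounds = typical_rounds + outlier_rounds

# The median barely notices the outliers; the mean is pulled sharply upward.
print("Median round:", median(all_rounds))
print("Average round:", mean(all_rounds))
print("Average / median:", mean(all_rounds) / median(all_rounds))


def percent_drop(old, new):
    """Percentage decline from an old value to a new value."""
    return (old - new) / old * 100


# Annual dollars raised: $22B long-run median versus $16B recently.
print(f"Fundraising decline: {percent_drop(22, 16):.0f}%")
```

In this made-up sample, two large rounds are enough to make the average twice the median, which is the same pattern the 2010 and 2011 figures show.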

| Let’s Not Get Too Excited About Google Fiber… Yet Posted: 28 Jul 2012 03:21 PM PDT  Earlier this week, Google provided details of its Google Fiber rollout in Kansas City. To hear some blogs tell it, it’s like the heavens will open and grant Kansas City blazing-fast Internet and competitive TV packages that will solve all the problems locals have with their current cable provider or ISP. But see, it’s not that easy. Google faces a number of challenges as it transitions to become an ISP. Here’s why Google’s grand experiment laying fiber might not be all that it’s cracked up to be. The rollout problem Don’t get me wrong: from a cost standpoint, Google Fiber sounds pretty amazing. It offers Gigabit speeds at an attractive price point, which other ISPs probably can’t compete with. And it would be great, if it were available today. But rolling out fiber is a complicated process, and most Kansas City residents anxious for some high-speed competition probably have a long wait ahead. Google is doing some interesting things in trying to expedite its fiber installations. It has separated the city into “fiberhoods” of 250-1,500 households apiece, and is asking potential subscribers to express their interest and pre-register, essentially reserving a spot in line when installations begin. Google will then use that interest data to determine which fiberhoods it will tackle first. All of that is designed to help concentrate installations close to one another, at least during its initial rollout, which has the potential to speed up the process. But it will still be a very, very slow process. On September 9, Google will determine its fiberhood rankings and which fiberhoods reached the goal needed to get fiber installed. Once that’s done, Google has the arduous task of actually making installations. How long will that take? 
Let’s put it this way: Google estimates that it will be able to reach about 50 percent of fiberhoods by mid-2013, with its full rollout in Kansas City completed by the end of next year. That means residents who register today could wait as long as 18 months before they actually get fiber installed. The installation problem Now let’s talk about how installations will actually work. Most people today kind of take for granted the coax that they’ve had installed forever, even if they hate their current ISP. But ask anyone who transitioned from their local cable company to IPTV services from Verizon or AT&T over the last few years: installing fiber isn’t a simple process. Getting fiber to a new house or multi-tenant unit involves connecting fiber to the home, installing new equipment in the home, and running lines throughout the home. And let’s keep in mind, cable technicians, the people who actually do this stuff, aren’t just born. There’s all sorts of training that they go through to learn how to hook up and subsequently wire a home, and it’s doubtful that Google is just going to train a bunch of newly minted techs to take care of its high-profile fiber rollout. Nope, Google is going to recruit and hire already-trained techs away from its local cable competition. Which means that the current cable guy you complain about when he shows up late to an appointment is probably going to be the same guy who shows up to do your Google Fiber installation. That brings us to… The customer service problem Google has never had a great record of customer service, mainly because it’s never had to. Most of its services are powered by the Internet and take a web-centric view of customer relations. They put up a FAQ, create some automated feedback forms, and maybe answer the occasional email if things get really bad. Remember Google’s failed attempt at selling its Nexus One smartphone direct to consumers? 
Its lack of customer service was a key reason it failed to get much traction in that attempt. The Wall Street Journal reports that Google has massively beefed up its phone customer service for its Google Play store and a new effort at direct device sales. But becoming an ISP is a very high-touch business. The biggest reason most people hate their current ISP or cable company isn’t that their Internet isn’t fast enough; it’s that the customer service consistently sucks. And it’s not clear how Google plans to offer a better alternative, especially in a field in which it has consistently done a poor job. The content problem Along with its Google Fiber broadband business, the company is also offering a pay TV add-on, Google Fiber TV, that will offer up an alternative to local pay TV packages at a pretty competitive price. There are a lot of cool features, including a 2TB DVR and the ability to record up to eight programs at once. It also is fundamentally changing the way that users interact with their TVs, by offering up the Nexus 7 as the standard TV remote. But there’s one thing that Google Fiber TV is missing: content. While it boasts more than 116 channels, the current offering is missing key networks like ESPN, Disney, AMC, TBS, TNT, HBO, and the like. I’ve spoken with some network people, and it appears that Google is negotiating with all of them, in an effort to get a more complete bundle together. It could be that this last piece ends up being a footnote, as it’s entirely possible that by the time the service gets real penetration, Google will have those other content providers on board. For now, though, Google still faces the problem of striking deals with more providers. That’s not to say that Google Fiber doesn’t sound awesome. But really, the devil is in the details. And Google faces a lot of logistical and operational challenges in the rollout. We’ll see how it handles them. Time Warner Cable truck photo courtesy of The Consumerist. |

| Move Over, Pebble: MetaWatch’s New ‘Strata’ Aims To Make A Splash On Kickstarter Too Posted: 28 Jul 2012 02:20 PM PDT  Sure, the Pebble has nabbed its share of headlines and accolades lately, but that doesn’t mean it’s got the nascent smart watch market all sewed up. Case in point: veteran MetaWatch recently pulled back the curtains on its new Strata smart watch, and it’s already picking up plenty of steam on (where else?) Kickstarter. Unlike some of the other smart watch concepts that have been dreamed up in recent months, the Strata is the brainchild of a known quantity. MetaWatch has been tackling the problem of putting topical information on people’s wrists for nearly eight years now; the company’s roots lie with the clothing and accessory mavens at Fossil, which produced a pair of fashion-conscious smart timepieces in May 2011 before the team split off and formed their own company that August. Since then that team has been working on developer-oriented smart watches, but now they’re ready to bring the Strata to the masses. Conceptually, the Strata doesn’t stray too far from the models that preceded it. In short, the watch connects to a compatible iDevice or Android handset via Bluetooth and provides call information, text messages, and weather updates at a glance. Thanks to MetaWatch’s SDKs and open-sourced software, developers can tap into the Strata with apps that live directly on the handset it’s connected to. A few nifty add-ons like an integrated running app, music controls, and an alert that warns users when they’ve wandered away from their phones round out the (rather handsome) package. Where the Strata really bucks the trend it helped start is its strong focus on iOS support, and specifically support for iOS 6. Take a look at the watch’s Kickstarter demo video to see what I mean (go ahead, I’ll wait). Yep, there’s nary a mention of Android to be found. That’s not to say that the Strata will leave Android users behind. 
MetaWatch’s earlier development units were meant to be used with Android devices, and the project’s description notes that the Strata already works with devices like the Galaxy Nexus. There’s no word yet on what other specific models the Strata will play nice with, but apparently most Android handsets running on 2.1 or later should do the job. Then again, that iOS push may be a savvier move than it appears at first glance. Huge consumer electronics companies like Motorola and Sony have thrown their gauntlets into the wrist-mounted display ring with devices that link up to their respective Android smartphones, with varying (and not very considerable) degrees of popularity. Apple’s hardware ecosystem on the other hand hasn’t yet played home to this sort of wearable device, and the Strata’s novelty and utility may be enough to inspire a new generation of iPhone-toting wrist-glancers. So far, the Strata’s Kickstarter campaign seems to be moving at a steady clip — the project only went live yesterday morning and at time of writing 361 backers have chipped in a total of $62,000 to help MetaWatch’s latest make the leap from prototype to product. If this sort of momentum keeps up, we should be looking at a fully-funded project before Monday rolls around, but with tremendous popularity comes tremendous pressure — the team behind the record-breaking Pebble smart watch recently announced that they wouldn’t be able to stick to their original September launch window. Coincidentally, MetaWatch also aims to push out its first Stratas to Kickstarter backers in September, and there’s word of a retail push in the works too. We’ll soon see if demand for this little guy reaches the same fever pitch that propelled the Pebble to the top of the Kickstarter charts, but for now you may want to lock one down before they’re all gone — a first-run Strata can be had for $159, while developer-oriented packages and special edition variants can cost as much as $299. |

| Please Don’t Watch NBC Tonight. Or Any Night. Posted: 28 Jul 2012 01:47 PM PDT  Spoiler alert: Phelps and Lochte raced today. The results are all over Twitter. But the race won’t air on TV in America until tonight. This is 2012, not 1996. NBC has put all of the events live online, provided you have a cable subscription, but won’t have them available recorded online and won’t air many events, including the most high-profile ones, until a primetime tape delay. This isn’t a new strategy, just a dumb, outdated one.

Sums it up pretty well. We’ve already covered the failings of NBC (and the IOC) fairly extensively, but it’s a topic that bears repeating. Check out #nbcfail for a live (gasp, what’s that?) stream of people’s frustrations with the peacock network. Now, I like to think that NBC isn’t completely outdated and stupid. They’re doing this for a reason: m-o-n-e-y. NBC can make more money with its current strategy than it could with a strategy that’s better for the viewers. And it isn’t just broadcasting a run-of-the-mill sports event branded by a for-profit sports league. NBC is benefitting from Team USA branding and national pride, and is using that to fuck people over for better ratings. So if you’re cool with watching tape delays, having commercials during soccer games and more, then by all means watch NBC tonight and give them good ratings. But if people really want this to change, especially for future Olympics, World Cups and more, then you need to not watch. Scour the internet for bootleg streams, most of which are live; or if you must watch the games tonight, go to a bar where the games will already be on. Don’t contribute to the ratings. Or you can cave in and watch and we’ll all suffer through tape delays like Nicolas Cage in, well, every movie ever. |

| BlogFrog Shows The Power of Women Bloggers But Trust Critical As Influencer Marketing Programs Rise In Popularity Posted: 28 Jul 2012 01:04 PM PDT It’s worth noting that BlogFrog has developed a platform that would not be possible without women bloggers. The newly available platform has a network of 100,000 “social influencers.” Women represent 95% of that community. These are women who write about parenting, food, health, fashion and home and garden. It’s with this network of women bloggers that BlogFrog has built what it calls an end-to-end influencer marketing platform that brands use to develop social marketing campaigns that connect blogs, brand sites and their properties on social networks. On BlogFrog, bloggers are tracked and measured according to their social media influence. BlogFrog’s technology platform tracks the posts that bloggers craft. Comments are managed through a BlogFrog widget that allows moderation and distribution of reader comments. Blog posts and reader comments can then be distributed to the customer’s own web page, which is usually a branded asset of some kind that markets consumer products. Customers of BlogFrog’s software as a service (SaaS) get reporting on the campaigns through a realtime dashboard that tracks impressions, unique visitors, reach, links, social actions (votes and likes), replies, clicks, and shares. Shifts in society have forced marketers to interact differently than they did in an age when there was no online medium for women to express themselves. As noted in a recent BlogHer study, women bloggers say writing a blog gives them a fuller sense of self. That’s true of many bloggers I know. If you believe what you write then it is only natural for it to have an impact on the way you view yourself. What these women bloggers write is not lost on the people who read these blogs. They relate to the way bloggers express themselves. According to BlogHer, 98% of women surveyed said they trust the information that they get from blogs. 
BlogFrog is banking on three converging trends that put the blogger in a central role as influencer but also as a sort of brand ambassador:

Influencer marketing is a hot trend. Services like Klout, Traackr and SocMetrics are examples of companies that have emerged in the space. I am a bit skeptical of influencer marketing. It is a form of pay-to-play, which has been on the rise. It leads to questions about the independence of the blogger. Are they writing for their community or the brand? Bloggers can get paid very well. BlogFrog says it has paid out about $500,000 to bloggers this year. That’s a lot of money. And the purse will only increase over time as advertising budgets go increasingly to social campaigns. BlogFrog will be extending its reach into other markets such as tech and gaming. It just formed a major partnership with Meredith Publishing, which selected BlogFrog as its influencer marketing platform of choice. The stakes are only going to get higher. But we need to be careful not to lose sight of why bloggers have become so important. It’s their independence that matters. If we forget that, bloggers will be viewed as nothing more than shills for big corporations. |

| Bitly Announces Realtime, A Search Engine For Trending Links Posted: 28 Jul 2012 12:31 PM PDT Today Bitly announced a new Bitly Labs project called Realtime, a service for finding the most clicked-on Bitly links. Realtime, now in private beta, allows users to filter searches by social network, keyword, subject and more. For example, here are the results for a search for the keyword “startups” in technology on Twitter: As you can see, it’s not perfect yet (why is that article on hidden Mountain Lion features being displayed on a search for startups?). But with Bitly’s traction in URL shortening, this could become the go-to reference for trending articles and sites. Bitly has been working on expanding its business beyond URL shortening in recent months and announced a new round of funding led by Khosla Ventures earlier this month. In May the site launched a controversial redesign that recast the service as a bookmarking service rather than just a URL shortening service, and in January it launched an updated version of its enterprise dashboarding service. But the really big idea the company has been floating is a real-time social search engine. It has been floating that idea since at least October, when it launched a social search engine beta for its enterprise customers and made it clear that it had bigger plans for search. Realtime, which so far is available to non-paying customers, seems to be the first step in realizing those plans. |

| Stranded Vessels Posted: 28 Jul 2012 12:00 PM PDT The 20th century was owned and operated by middle men. Industry began as the creation of something for which would be traded other goods, services, or cash. As production centralized, distribution (as always) rose to close the distance between the product and the consumer. Facilitating consumption became a business unto itself: printing, shipping, packaging, and all the rest. A respectable, powerful, and necessary business. More recently, when certain products became capable of being distributed without this mighty infrastructure, that business ceased to be necessary, and correspondingly its power and respectability are now in decline. Words and media being the most portable data, the huge industries that have long facilitated their consumption are dying, slowly and poorly. How long before the present titans of technology find themselves in a similar position? It’s hard to imagine exactly how it will happen, but the trends are easy enough to extrapolate. The key to everything is decentralization. All the major players have benefited from it, though in different ways. Microsoft profited from the decentralization of PC hardware, from a few thousand mainframes and offices to hundreds of millions of households and individuals. Apple profited from the decentralization of software development, creating a platform on which creatives the world over compete to offer the biggest tithe. Google profited from the decentralization of advertising, and has financed its dativorous habits by what amounts to an unprecedented volume of microtransactions. That’s not to say that no one has benefited from centralization, of course: Amazon centralized retail, and companies like IBM and Oracle have centralized R&D and services. But you can always invert this equation and say that the companies did not centralize the world’s demand, but rather decentralized the supply. 
What emerges from this trend is a challenge: to identify the few things billion-dollar companies do or make today for which, in ten or even five years, they will still be necessary. This is a rolling challenge, of course, but there are a few inflection points, one of which I believe we’re experiencing.
The gate will keep itself
The question is crystallized in the recent controversy over whether Skype has a back door so the NSA can tap your conversations, or something vaguely equivalent that will provoke equivalent outrage. They probably do. But that’s not really relevant. What’s relevant is that it publicizes the fact that there’s really no reason to use Skype. That is to say, there’s no real reason to use Microsoft’s product over a (for now, theoretical) reasonably similar one. And not one made by a competitor, strictly speaking at least. Why does there need to be a company in between two devices speaking mutually intelligible data to each other over a secure connection? The same question, with a bit of modification, applies to any number of applications and services. Nowadays, if I want a book or song, there’s a good chance I can buy it online from the person who makes it, with no third party except the payment processor. Why should it be different with other kinds of data? Unless it is materially necessary for a company to touch that data (Google has a proper search algorithm, Facebook sorts through your friends’ updates), why should they? Why, in other words, should we have to tolerate the idea not just that Microsoft is able to snoop on our data, but that they have anything to do with that data at all? There’s a good, simple answer, of course. Companies like Microsoft actually produce and support the services we use and value. We pay them for the privilege of using them, and in some cases it is impossible to perform some tasks without them. 
Just like how in 1990, it was impossible to purchase a piece of music without giving a little to Tower Records, a little to Warner, and a little to half a dozen other little remorae on the body of the artist. What of the major labels and studios will survive ten years from today? They have excellent recording facilities, of course, experienced producers, and so on, and will no doubt live to produce and sell music for some time to come. But none of them will argue that this has not been a transformative period. Some of them have already been transformed into history. So, I think we will find, will be the case with major tech companies today, some of which only keep the lights on by squeezing every byte until money comes out. How long will they be able to reach those bytes? What absolutely critical functions do they perform? How long, for instance, will people continue to support the App Store? Developers want access to as many buyers as possible, just like retailers want a storefront with lots of foot traffic. Retailers pay a lot for a spot downtown, and developers pay for presence in the app store. But what happens when foot traffic starts picking up where rent is free? What happens when the region of unlocked, “free market” devices becomes as rich and desirable as the Rodeo Drive of the App Store? Chaos first, of course, but then profit. Profit always comes after chaos. How long will Google maintain its stranglehold on both the volume and speed of data storage and retrieval? Not forever, for a start. Localized tools for indexing and searching will be more important as fiefdoms of sites and services declare independence from Google — as, indeed, Twitter has already done (to mixed success, but it’s still early). Maps? Books? Web cache? Google is unquestionably the leader in storing these things today, but they know that whatever they accomplish, it’s only a head start in a marathon. 
Is it really so unthinkable that the efforts of a voluntary multitude could produce a Google-esque collection of data, mapping the virtual and real worlds and recording their history? It’s unthinkable to me that this would not emerge, given time. The tools in our hands are too powerful not to be applied to these problems.
Cui bono?
But these vague complaints don’t get quite to the heart of what I want to suggest. Specifically, what will happen soon is that certain companies and services will be made totally redundant by the emergence of free and ubiquitous tools and protocols. Why would you need a Microsoft video calling program when two computers can find each other on a crowdsourced DNS and send encrypted packets directly to one another based on a video interlink protocol designed and released for free by Swiss hackers? Why will you need a Google cloud syncing service that keeps your calendar up to date and available, and directs emails to whatever device you’re using, when that service is made into a default feature on your router or PC or rented or public server space? Why will you use Facebook when you have a reliable and powerful way of keeping track of your friends’ latest pictures, updates, and location, but not tied to a company that wants to serve you ads with your data, and plays ball with the feds besides? They’re fantasies right now, of course — but the kind of fantasies that come true in a couple years, not the kind that live for centuries in storybooks. Like promises that you would someday be able to download a DVD in seconds: pie in the sky ten years ago, but today it’s a bandwidth buffet. Naturally, other elements of progress have made that old dream seem quaint, like sci-fi predictions of the present (downloading DVDs is kind of a silly thing to do, really), and the same will happen with today’s predictions, however else they may prove true. But the things these companies will be doing in a few years will be the things that only they can do. 
For Apple, that will be designing and making hardware — and to some extent, software, although they too will succumb in some areas to smaller, more maneuverable companies. Google will likely be able to tread water as a universal store of data and default service, but anonymizing (and increasingly, delocalizing) services, along with the further devaluation of traditional advertising, will clip its revenue wings. Microsoft will have a few proprietary high-value services it sells access to and which it jealously guards from duplication. But their feeling of entitlement over data (usually manifested in grotesquely overreaching terms of service) will cease to have any basis in reality. Not everyone will suffer. Who can do what Intel and ARM and Foxconn and Sony do? They’ll be fine for now, and will continue competing amongst themselves. They aren’t facing the existential threat that others are, at least not until home replicators can produce nanoarchitecture. And, importantly, interesting new ideas will continue to be built and expanded into new businesses, or new markets. Innovation grows in the cracks, like the grass on the sidewalk. And there’s never a shortage of cracks. Here's one growing right now. “One generation abandons the enterprises of another like stranded vessels,” says Thoreau. We abandon what we can, at any rate. And it is very likely that the vanguards of the web fleet are about to meet with considerable resistance, though to say they are stranded now or will be in ten years is certainly an exaggeration. But the part of their job that amounts to being data facilitators is about to come to a fairly abrupt end. If it can be replicated, it will be. If not now, soon. First for less, then for free. First for the bleeding edge, then for the trailing edge. Does that sound like one of those “big ifs” to you? 
Actually, it’s just this side of “when,” and that’s only because of the friction introduced to the system by patents and monopolies — and those always look so petty in hindsight. It’s not in the nature of technological progress to let such inconsequential obstacles bar its advance. And woe betide the fools and unfortunates who won’t (or can’t) get out of its way. |

| Kickstarter: Meet The Vers 1Q, A Stunning 2-inch Battery-Powered Bluetooth Speaker Posted: 28 Jul 2012 10:57 AM PDT I’m in absolute love. From the gorgeous wood cabinet to the technical capabilities, the little Vers 1Q is simply perfect. The $120 price ($99 for Kickstarters) is just icing on the cake. It’s rather refreshing to see a warm, nearly alive device in our world that’s generally filled with molded plastic and faux chrome trim. Simply put, the 1Q is a battery-powered Bluetooth speaker. A 2-inch driver provides the audio while, packed inside the walnut or bamboo casing, a 6.5W amp powers the audio provided from either Bluetooth or the 3.5mm jack. The included battery charges via microUSB and should last 10 hours on a charge. What more can you ask for from a small speaker? As shown by the pictures, the whole package is of a modest size. It fits in the hand, yet the creator brags that it “can easily deliver enough sound to fill some pretty large spaces.” Since it works with Bluetooth or an aux input, it should work with nearly every device. This isn’t Vers’ first consumer electronic device. The company already sells the Vers 1.5R radio/alarm, Vers 1E ear buds, and iPhone/iPad cases — all made out of bamboo and walnut. The project is already funded on Kickstarter but they are still taking orders for 18 more days. Pledge $95 for a 1Q made out of either bamboo or walnut. Or, pledge an additional $30 for the limited edition red beech edition. Best yet, Vers promises these things will be delivered well before the holidays. |

| TechCrunch PSA: Olwimpics Blocker Blocks The Olympics Posted: 28 Jul 2012 10:19 AM PDT If you’re like me, you never really got into spectator sports. Maybe it was the jock-induced swirlies or maybe it was the pointlessness of ball-based games, but I couldn’t give two shot puts about the Olympics. Thankfully, there’s the Olwimpics Blocker from FFFFF.at. This plugin turns all mentions of the Olympics into soothing, bright primary colors. It works on Chrome, Firefox, and Safari and works a treat. For example, the image above is how I see this post: Olympics, olympics. Olympics! The project took two hours to build but will save you days of Olympic overdose pain. Olympics! |

| Gillmor Gang: London Calling Posted: 28 Jul 2012 10:00 AM PDT The Gillmor Gang — Robert Scoble, Dan Farber, Kevin Marks, John Taschek, and Steve Gillmor — killed some time waiting for NBC to let us watch the Olympics on our tablets and phones like the rest of the world. @dbfarber isn’t ready to write off Microsoft, but I can’t help wondering why Steve Sinofsky was content to duck a journalist’s question about the Windows Surface’s impact on hardware partners by pushing him toward the tablet with the suggestion he go learn something. With a week of Google Nexus 7 under our belts, a rumored deal between Apple and Twitter, and Mitt Money on his Insult Europe Tour ’12, we’re entering some good times as the world melts. Bring on the fiber; Kansas City here I come. @stevegillmor, @scobleizer, @dbfarber, @jtaschek, @kevinmarks Produced and directed by Tina Chase Gillmor @tinagillmor |
